Online shoppers are getting antsy. They’re spending less time thinking about purchases before buying, or leaving a site, which drastically shrinks marketers’ window of opportunity. We explore the latest trends in online personalization and how to keep up.

We spend a lot of time talking about personalization in marketing. That’s because it’s both the most profitable way for our customers to leverage artificial intelligence and the most challenging. A new paper by Seth Earley, published in IEEE’s November/December issue of IT Professional, lays out some of these challenges and addresses how best to overcome them. “AI-Driven Analytics at Scale: The Personalization Problem” touches on many of the issues our customers face every day, including maximizing data scientists’ time, developing use cases in a timely fashion, and even using a Signal Layer to help expedite the process. It also cites a new study that examines exactly how much time companies have to apply their personalization insights in a real-time setting.

After explaining the usual “too much data, too little time” conundrum, Earley shares an alarming statistic. The amount of money spent via online transactions will increase by 26 percent to $2.1 trillion in 2017. Yet the amount of time consumers will spend making those purchase decisions will shrink to just 4.9 to 6.9 minutes, depending on which device they’re using. This is down from a range of 5.5 to 7.6 minutes in 2016 (Source: Statista, 2017). Thanks to the increase in mobile device usage for online shopping, Earley points out, “Online retailers have more data for analysis and personalization insight, but the cycle time in which it can be applied is rapidly diminishing.”

While this is certainly true in personalizing customer engagement, the same can be said for inventory management, pricing, risk management, fraud detection, and many other areas. The time between generating insights and acting on them is shrinking.

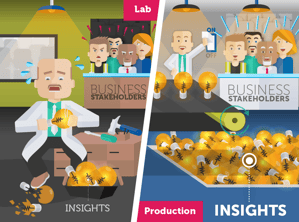

Companies must get faster and more precise when it comes to analyzing everything from basic demographics to sentiment and likelihood to recommend. They must quickly identify which content will be most effective in engaging customers with an omni-channel strategy, identifying the right tone, message, channel, product recommendation, and more for each customer in a matter of minutes. Even the best recommender engines, however, will miss details buried in the insights. Earley says these nuances will be “lost in translation from development to production or from data science teams to production analyst teams.”

As a company that builds such solutions and implements a Big Data platform for some of the world’s largest enterprises, we see the disconnect between development and production first-hand. Most companies are still struggling with simply identifying key insights and applying them to real-world use cases, such as identifying which customers are most likely to accept a given offer. Once a company gets that use case figured out and running consistently, it starts over with another use case, such as figuring out which customers are about to switch to a competitor.

There are two issues with this:

- Companies that do this are not working “at scale.” Each use case is bespoke, and for each one, data scientists are starting at the beginning, identifying data sources, doing all the data prep work, and repeating every step in the entire process, redoing much of the exact same work they did for the first use case.

- These companies are missing specific insights. While they may identify a handful of factors that contribute to a given use case, the insights they discover for one use case are not being carried through to subsequent use cases. An airline, for instance, might look at past purchase behavior to determine whether a customer is about to attrite. And the scientists may find patterns that could be indicators of attrition (such as being forced to sit in a middle seat, missed connections, lost luggage, or excessive delays). These indicators could be used for additional use cases, such as predicting which customers are about to call your customer service center, but discoveries from one use case don’t necessarily make it to the next, even though each insight might hold predictive qualities for multiple use cases.

So how do companies combat this and ensure their insights are consistently accurate, pulling in even the smallest details to help make a given prediction? Earley explains that the answer is standardization of processes and reuse of components and models. The best place to start is with a platform that can provide a taxonomy of insights that provides visibility into where each insight came from and how it’s currently being used. Such visibility allows everyone in the organization to get on the same page and helps companies break down department or function silos that are all too common in most enterprises today. Earley suggests building a “factory model,” designed from the ground up to deliver benefits at scale. In other words, these insights should not just be searchable, but reusable, like interchangeable parts in an assembly line.

We call these reusable components and models Signals. “The objective of a preprocessing approach,” Earley explains, “is to process the data independent of its future use and then apply artificial intelligence and machine learning in the signal layer to drive business application functionality.”

The Signal Layer sits in a company’s technology stack between the data layer and the application layer. It allows use cases to access its Signals, which are being constantly refreshed based on insights from new data. The Signal Layer hosts not only the Signals, but also the science and technology required to build those Signals.
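To make the idea concrete, here is a minimal sketch of a signal layer in Python. It is not how Signal Hub is implemented. The `SignalLayer` class, the three signal names, the record fields, and the thresholds in the two use cases are all hypothetical, chosen only to mirror the airline example above. The point it illustrates is that Signals are built once from raw data, refreshed when new data arrives, and then read by more than one use case.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Events = List[dict]  # one customer's raw flight records (hypothetical schema)


@dataclass
class SignalLayer:
    """Toy signal layer: builds named Signals from raw data and caches them."""
    builders: Dict[str, Callable[[Events], float]] = field(default_factory=dict)
    cache: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def register(self, name: str, builder: Callable[[Events], float]) -> None:
        self.builders[name] = builder

    def refresh(self, raw: Dict[str, Events]) -> None:
        """Recompute every registered Signal for every customer."""
        self.cache = {
            customer: {name: build(events) for name, build in self.builders.items()}
            for customer, events in raw.items()
        }

    def get(self, customer: str, name: str) -> float:
        return self.cache[customer][name]


# Signal builders: the data preparation work, done once (illustrative only).
def middle_seat_rate(events: Events) -> float:
    if not events:
        return 0.0
    return sum(e["seat"] == "middle" for e in events) / len(events)


def long_delay_count(events: Events) -> float:
    return float(sum(e["delay_minutes"] > 60 for e in events))


def lost_luggage_count(events: Events) -> float:
    return float(sum(e["lost_luggage"] for e in events))


# Two use cases that read the same Signals instead of returning to raw data.
# The rules below are made-up stand-ins for real predictive models.
def is_attrition_risk(layer: SignalLayer, customer: str) -> bool:
    return (layer.get(customer, "middle_seat_rate") >= 0.5
            or layer.get(customer, "lost_luggage_count") >= 1)


def is_likely_to_call(layer: SignalLayer, customer: str) -> bool:
    return (layer.get(customer, "long_delay_count") >= 1
            or layer.get(customer, "lost_luggage_count") >= 1)


raw = {
    "c1": [
        {"seat": "middle", "delay_minutes": 90, "lost_luggage": True},
        {"seat": "middle", "delay_minutes": 0, "lost_luggage": False},
    ],
    "c2": [
        {"seat": "aisle", "delay_minutes": 10, "lost_luggage": False},
    ],
}

layer = SignalLayer()
layer.register("middle_seat_rate", middle_seat_rate)
layer.register("long_delay_count", long_delay_count)
layer.register("lost_luggage_count", lost_luggage_count)
layer.refresh(raw)  # call again whenever new raw data arrives

for customer in raw:
    print(customer, is_attrition_risk(layer, customer), is_likely_to_call(layer, customer))
```

Notice that `lost_luggage_count` is computed once and feeds both use cases. Adding a third use case means writing one more small function against the layer, not repeating the data preparation.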

Earley goes on to describe how a platform approach, “with a combination of systematic feature engineering and automated and system-assisted model building,” can help companies create hundreds of predictive models faster and more efficiently than ever before. Without such a platform, scientists need to go all the way back to the raw data layer to build each use case. With a platform approach, they only have to return to the Signal Layer, where 80% of the work (namely data preparation) is already done. In addition, such a platform democratizes a company’s data science capabilities across the enterprise, making insights available to anyone who needs them.

Opera Solutions’ flagship product, Signal Hub, is a platform that does exactly what Earley explains, using artificial intelligence to build Signals, storing and refreshing those Signals in a Signal Layer, and making them available to anyone in the enterprise, for any use case. Signal Hub also includes a unique Workbench that allows data scientists to quickly develop new models and use cases, as well as a Knowledge Center, which allows business users and scientists alike to explore each Signal, finding its origins and examining the specific insights that helped create it.

To learn more about how Signal Hub can help your organization keep up with the rapidly changing demands and attention spans of consumers, download our paper, “Personalized Marketing at Scale.”

Laks Srinivasan is co-COO at Opera Solutions.
