Data is the lifeblood of most organizations these days. The insights that come from a company’s data can help drive major — and minor — decisions, providing incremental boosts in company performance on a regular basis and even drastic boosts on occasion. But if you’re not seeing these kinds of results from your data, your problem might not be the analytics. It’s more likely that you’re missing key steps in preparing the data and ensuring its quality.

Proper data preparation ensures the ability to access both internal and external sources of data and transform these data sets into a form that’s ready for analysis. This might involve various forms of data transformation, including processes for improving data quality. Data scientists spend up to 80% of their time preparing data for analysis and ensuring its quality, leaving only 20% to do the actual modeling and analysis that deliver the relevant, actionable insights companies are after.

These numbers are not new, and they shouldn’t be a surprise to anyone reading this. But for those wondering what exactly goes into the process, why it takes up so much of scientists’ time, and why data prep and data quality are so important, we spell it out here.

Data Preparation

Data preparation is the process of manipulating data into a form that is suitable for analysis. “Suitable” in this case means that the data, regardless of its source, is clean, complete, and quantifiable. This is typically a tedious task that requires human intelligence to complete. To support the specific analytics goals for the enterprise, companies must take a strategic approach to the way they gather data from all the necessary, relevant, and appropriate sources. Identifying these sources is often part of the preparation process. If the data being collected won’t deliver the results the company wants, then the company must expand its data sources, often to places outside the enterprise.

Analytics typically draws on internal data sources, such as transactional systems (for e-commerce sites), ERP systems, data warehouses, departmental data marts, and data lakes. Through extract-transform-load (ETL) tasks, data scientists can transform the data sets into a form that’s compatible with the needs of predictive analytics.

Here are a few important steps data scientists take to prepare data for analytics:

1. Streamline access to the data — Building a robust data pipeline requires a way to quickly and efficiently refresh data sets used for predictive purposes. Scientists must retrain machine learning algorithms to improve accuracy, and if the process of accessing critical data is cumbersome, the whole process suffers. As time goes on, an increasing number of data formats will become available, so the data access part of the pipeline should be flexible enough to accept new formats and structures.

2. Build an abundant data transformation toolbox — Over time, the size (and complexity) of the company’s data transformation toolbox will grow. Common tasks such as sorting, merging, aggregating, reshaping, partitioning, and coercing data types need to be covered, but companies also need to consider supplementing data (e.g., adding longitude and latitude data for geospatial analysis).

3. Statistical analysis — During the data prep stage, scientists often need to perform exploratory data analysis to gain deep familiarity with the data. They’ll need tools for simple statistics such as calculating the mean, variance, and standard deviation, as well as tools for the analysis of the underlying probability distributions and variable correlations.
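To make steps 2 and 3 concrete, here is a minimal sketch of a few toolbox tasks (coercing types, merging, sorting, aggregating) followed by some simple exploratory statistics. It uses only the Python standard library. The records, the field names, and the helper functions (`coerce_types`, `merge_region`, `aggregate_by`, `pearson`) are all hypothetical and exist only for illustration; a real pipeline would more likely lean on a dedicated data-frame or ETL tool.

```python
from collections import defaultdict
from statistics import mean, pstdev, pvariance

# Hypothetical raw records, e.g. as read from a CSV export (all values are strings).
raw_orders = [
    {"customer_id": "c1", "amount": "120.50", "units": "3"},
    {"customer_id": "c2", "amount": "80.00", "units": "2"},
    {"customer_id": "c1", "amount": "40.00", "units": "1"},
    {"customer_id": "c3", "amount": "200.00", "units": "5"},
]
# Hypothetical lookup table used to supplement the orders with a region.
customer_region = {"c1": "West", "c2": "East", "c3": "West"}


def coerce_types(rows):
    """Coerce the string fields into numeric types."""
    return [{**r, "amount": float(r["amount"]), "units": int(r["units"])} for r in rows]


def merge_region(rows, lookup):
    """Merge a region onto each row (a simple left join on customer_id)."""
    return [{**r, "region": lookup.get(r["customer_id"])} for r in rows]


def aggregate_by(rows, key, field):
    """Sum `field` for each distinct value of `key`."""
    totals = defaultdict(float)
    for r in rows:
        totals[r[key]] += r[field]
    return dict(totals)


def pearson(xs, ys):
    """Pearson correlation computed from population moments."""
    mx, my = mean(xs), mean(ys)
    cov = mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    return cov / (pstdev(xs) * pstdev(ys))


# Transformation: coerce, merge, then sort by order amount.
orders = sorted(
    merge_region(coerce_types(raw_orders), customer_region),
    key=lambda r: r["amount"],
)
totals_by_region = aggregate_by(orders, "region", "amount")

# Exploratory statistics on the prepared data.
amounts = [r["amount"] for r in orders]
units = [r["units"] for r in orders]
summary = {
    "mean": mean(amounts),
    "variance": pvariance(amounts),
    "std_dev": pstdev(amounts),
    "corr_amount_units": pearson(amounts, units),
}
print(totals_by_region)
print(summary)
```

Keeping each transformation as a small, separate function is one way to let the toolbox grow over time without rewriting the pipeline around it.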

Data Quality

Predictive analytics is only as good as the incoming data and its preparation. The old adage of “garbage in, garbage out” still applies in the era of Big Data. High-quality data is comprehensive, consistent, up to date, and error-free. The processes involved with data quality management (DQM) vary widely and, we imagine, have an equally wide range of success. We learned of some of these DQM methods from 451 Research, which conducted a survey in 2016, asking 200 enterprise professionals how they managed data quality. The results shine a light on the shortcomings companies have with ensuring data quality as well as the importance of DQM tools in the enterprise.

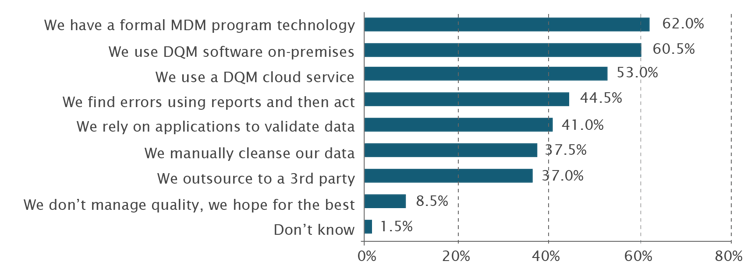

Figure 2 shows that enterprises manage data quality in a variety of ways, or in some cases fail to manage it at all. According to the chart, 44.5% of participants said they find errors using reports and then act. Another 37.5% said they “cleanse their data manually,” another unmotivated approach. A small but noticeable group, 8.5% of participants, said they don’t manage quality and just hope for the best. Fortunately, we see that a majority of participants indicate they use formal MDM program technology and/or DQM software or cloud services to address data quality. These solutions are the preferred route for maintaining the health of enterprise data assets.

Figure 2: Means for Managing Data Quality

Source: 451 Research, January 2016

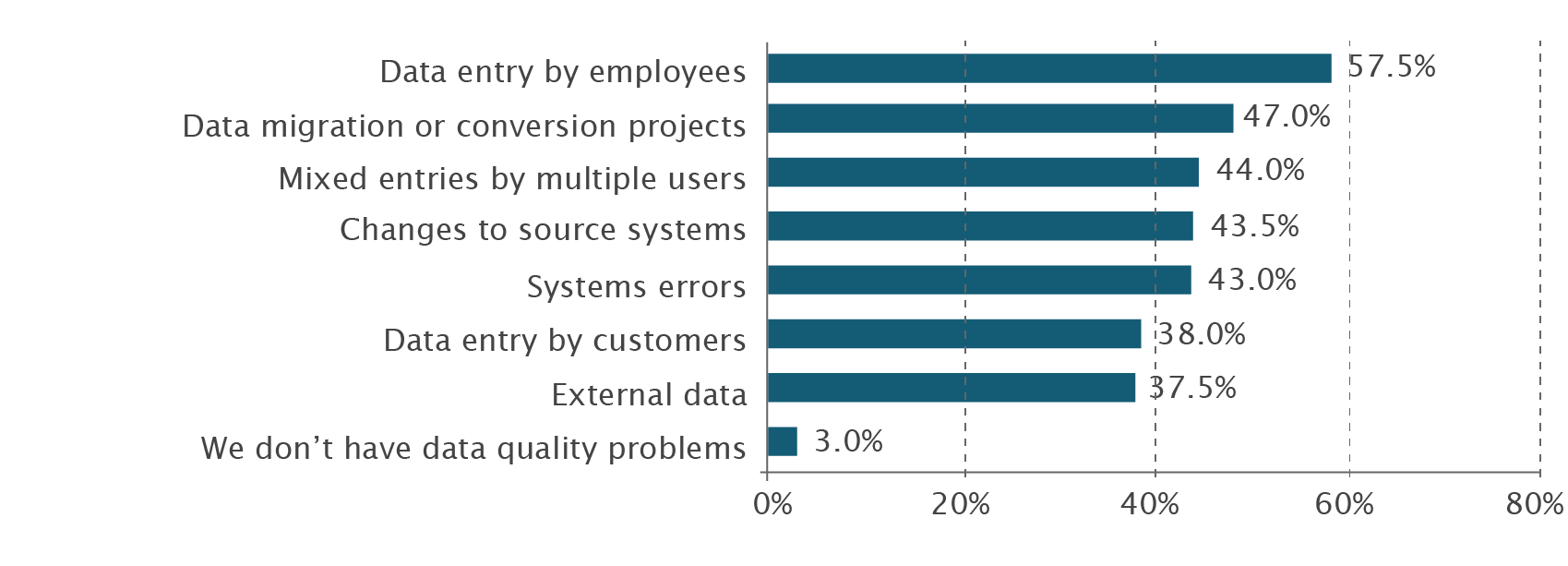

So what causes poor data quality? As expected, human error ranked as the number-one offender. IT-related practices such as migration efforts, system changes, and system errors were also frequently cited. Surprisingly, however, 38% of participants cited their customers as the cause of data quality issues. Customer data entry usually involves an interaction with a Web-based application, Web service, or mobile app, all of which typically have integrated data validation to address error-prone data at the point of entry. Chances are, not enough planning or development went into building these participants’ enterprise systems, resulting in poor data quality from customers. Further, errors from external data sources (e.g., data feeds from large customers, partners, or even open data sources) are likely to increase as more organizations accelerate the sharing of data and services via API integration with their customers and supplier partners.

Figure 3: Causes for Poor Data Quality

Source: 451 Research, January 2016
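For readers wondering what validation at the point of entry might look like, the following is a minimal sketch in standard-library Python. The record fields (`name`, `email`, `signup_date`), the rules, and the `validate_customer` helper are assumptions made up for this example, not something taken from the survey or from any particular product. The email pattern is deliberately loose and only catches obviously malformed input.

```python
import re
from datetime import date

# Loose, illustrative pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_customer(record):
    """Return a list of problems found; an empty list means the record passed."""
    errors = []

    # Required field: a name that is not blank.
    name = (record.get("name") or "").strip()
    if not name:
        errors.append("name is required")

    # Format check: reject obviously malformed email addresses.
    if not EMAIL_RE.match(record.get("email") or ""):
        errors.append("email is not well formed")

    # Type and range check: an ISO date that is not in the future.
    try:
        signup = date.fromisoformat(record.get("signup_date") or "")
        if signup > date.today():
            errors.append("signup_date is in the future")
    except ValueError:
        errors.append("signup_date must be YYYY-MM-DD")

    return errors


good = {"name": "Ada", "email": "ada@example.com", "signup_date": "2016-01-15"}
bad = {"name": " ", "email": "ada@", "signup_date": "15/01/2016"}
print(validate_customer(good))
print(validate_customer(bad))
```

Returning a list of problems, rather than stopping at the first one, lets an entry form report everything that needs fixing in a single pass.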

The good news is that most participants report that their companies have already made data quality investments. Interestingly, 24% of the participants who have some solution already in place still see reason to evaluate new tools, such as enterprise-class data quality tools, master data management (MDM) tools, and data catalog tools. Such tools allow companies to take a proactive stance at data governance, making sure that their data is suitable for predictive analytics.

We also see indications that ROI is important in determining DQM success, with over 25% of participants claiming that DQM delivers a high ROI. Further, 80% of participants weighed data quality as a good investment that ranks high in importance. Determining ROI as a direct effect of DQM is rather subjective, however, since a strong correlation between healthy data and increased sales does not necessarily imply causation. Still, it is a good indication that enterprises are taking data quality seriously by making an attempt to measure how their investment in this effort is paying off.

Data Quality’s Contribution to Business and Analytics

Because data and the insights derived from it can have far-reaching effects throughout the enterprise, it’s critical that scientists thoroughly prepare and check the data to ensure that it is complete, accurate, and up-to-date. And while senior management is generally shielded from the intricate details of the layers of technology and data supporting their decisions, they do have an appreciation for getting objective and reliable data and insights. Therefore, data teams must present a collective business case for Big Data and analytics projects, one that ties data quality programs, sound ETL infrastructure, and a predictive analytics platform to larger business objectives, especially when addressing C-level decision makers. To leverage data as a strategic asset, businesses must build confidence in the data they already have. With high-quality data, and the confidence that comes with it, enterprises experience increased revenues, reduced costs, and higher productivity.

While no technology currently exists to replace the data scientist’s job of cleaning and normalizing data and ensuring its quality, the right predictive analytics platform can make sure they do that job just once. Opera Solutions’ Signal Hub creates and hosts reusable Signals, or valuable insights derived from the data, so that scientists don’t have to redo the data prep work that has traditionally required up to 80% of their time. For every use case run with Signal Hub in a company, scientists spend less time preparing data and more time building models that satisfy business objectives.

Daniel D. Gutierrez is a Los Angeles–based data scientist working for a broad range of clients through his consultancy AMULET Analytics.