Artificial intelligence is no longer just evolving nomenclature in IT. With the technology’s recent progress, organizations of all shapes and sizes are taking an interest. As the mainstream press and industry analysts from every corner weigh in, it is worth taking stock of the technology and learning how to differentiate between three arguably over-hyped terms: machine learning, artificial intelligence (AI), and deep learning.

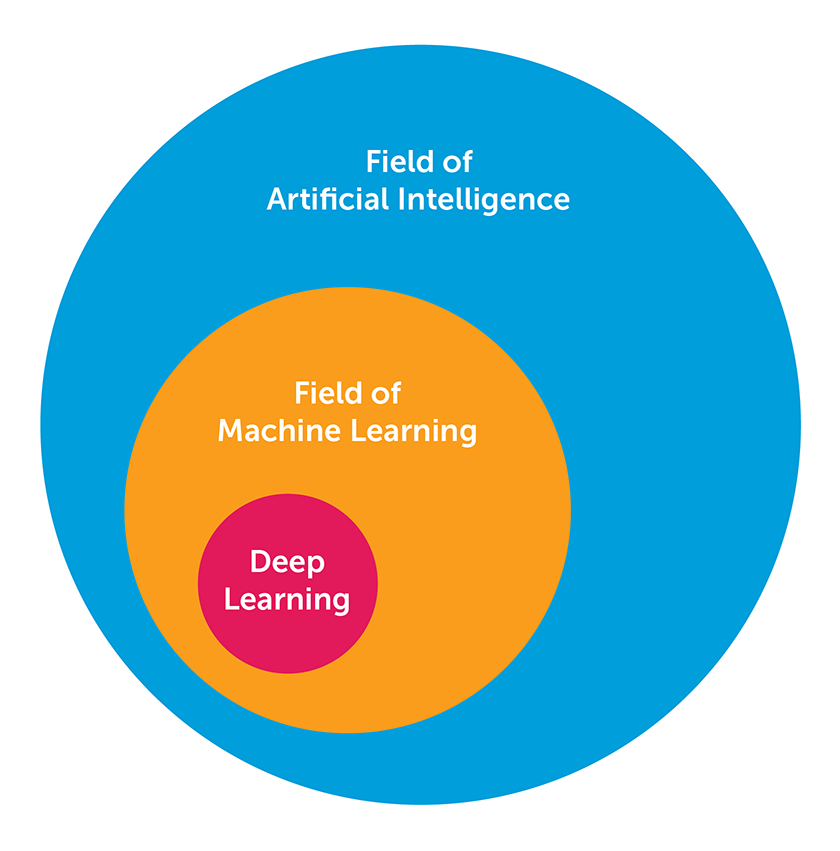

It’s best to start with the concentric model depicted in the figure. AI is shown as the superset because it was the idea that came first, and it has been evolving and expanding ever since. Machine learning, a subset of AI, emerged early in the pursuit of AI. The innermost subset is deep learning, which is just one class of machine learning algorithms. Deep learning is a hot area right now, and it is the one most closely associated with today’s rise in AI.

AI has been the great hope for computer science in many academic circles and think tanks since the 1950s, when the term was first coined. Since then, AI has progressed slowly, in fits and starts. Until around 2012, its reach was mainly limited to advanced technology companies, governmental organizations, and research institutions.

More recently, however, AI has broken away from the hypothetical and into real-world business solutions. Much of this advancement is a result of the availability of GPUs (graphics processing units), which provide the cost-effective compute resources AI demands. The ascent of AI is also a result of big data, with its ever-rising volumes of data available for training.

Machine learning, which blossomed in the 1990s, uses a variety of algorithms both to make predictions and to discover previously unknown patterns in large data sets. Modern machine learning algorithms are closely related to statistical learning and optimization.
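To illustrate the link to optimization, here is a minimal sketch in plain Python that fits a straight line, y = w*x + b, by gradient descent on the mean squared error, the same least-squares criterion used in classical statistical regression. The function name `fit_line`, the toy data, the learning rate, and the step count are illustrative assumptions, not part of any particular library.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by minimizing mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step "downhill" along the negative gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical toy data generated from y = 2x + 1 (no noise)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

Each step nudges the parameters in the direction that most reduces the error. Gradient-based training of neural networks follows the same pattern at a much larger scale.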

Deep learning is a type of machine learning that uses artificial neural networks, and it is generally considered the main driver of today’s AI. Deep learning addresses the needs of contemporary applications such as computer vision for autonomous vehicles, image and video classification, speech recognition, natural language processing (NLP), and much more. What distinguishes deep learning is the large number of so-called “hidden layers” in the neural networks it uses. Machine learning, AI, and deep learning are all here to stay and will become pervasive in our everyday lives.

The Relationships between AI, Machine Learning, and Deep Learning

Let’s take a cursory look at the ways machine learning, AI, and deep learning relate to one another and provide a high-level definition of each term. We’ll start with AI.

AI has been an over-hyped term in the past year or so. Many technology companies, and even some non-tech companies, claim that their products and services use some form of AI even when saying so is quite a stretch. Fortunately, AI’s importance today is real, and so are its uses. We see it in our smartphone apps, in home assistants like Amazon’s Alexa, in robotics, and even in the autonomous vehicles being developed by the major automotive manufacturers and ride-sharing startups.

Much of today’s AI involves the use of artificial neural networks (ANNs). ANNs have been around for decades, but they were largely ineffective because compute resources have only recently reached a level that can deliver on the promise of AI. The organization of an ANN is modeled after the human brain, specifically its connected neurons. An ANN consists of three kinds of parts, called layers. The input layer receives the data fed to the network (during training, the examples from the training set). The output layer delivers the network’s prediction. And the middle layers, also called hidden layers, deliver the power of the network. Data flows from the input layer, through one or more hidden layers, and finally to the output layer.
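The flow of data through the layers can be sketched in a few lines of plain Python. This is a minimal, illustrative forward pass only: the layer sizes, the hand-picked weights, the sigmoid activation, and the helper names `dense` and `forward` are all assumptions, and a real network would learn its weights from data rather than have them written in by hand.

```python
import math

def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Feed the input vector through each (weights, biases) layer in turn."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation

# Hypothetical network: 2 inputs -> hidden layer of 3 -> hidden layer of 2 -> 1 output
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer 1
    ([[0.2, -0.5, 0.3], [0.7, 0.1, -0.4]], [0.05, 0.0]),         # hidden layer 2
    ([[1.0, -1.0]], [0.0]),                                      # output layer
]
output = forward([1.0, 2.0], layers)
print(output)  # a single value between 0 and 1
```

Here the input layer is simply the input vector `[1.0, 2.0]`; every entry in `layers` except the last is a hidden layer, so adding entries is what makes a network “deeper.”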

To be useful, however, an ANN must first be trained, which means the computer must process the large data set used to train the network. Without enough training, predictive accuracy suffers. Until recently, computers couldn’t handle data sets big enough to reach the accuracy levels required for practical use.
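What “training” involves can be sketched with backpropagation and gradient descent, the standard way to train ANNs, on a tiny network in plain Python. This is a minimal sketch under stated assumptions: the four-example XOR data set, the hidden-layer size, the learning rate, the epoch count, and the helper name `train_xor` are all illustrative; real training sets are many orders of magnitude larger.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, one-hidden-layer network on XOR with plain backpropagation."""
    rng = random.Random(seed)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    # Random initial weights: w1[j] holds the two input weights of hidden neuron j
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def predict(x):
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    def loss():
        # Mean squared error over the whole training set
        return sum((predict(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial = loss()
    for _ in range(epochs):
        for x, t in data:
            h, y = predict(x)
            # Backpropagate the error (factor of 2 from the squared error is folded into lr);
            # sigmoid'(z) = s * (1 - s)
            d_out = (y - t) * y * (1 - y)
            d_hid = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent updates for every weight and bias
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_hid[j] * x[0]
                w1[j][1] -= lr * d_hid[j] * x[1]
                b1[j] -= lr * d_hid[j]
            b2 -= lr * d_out
    return initial, loss(), predict

initial, final, predict = train_xor()
print(f"loss before training: {initial:.3f}, after: {final:.3f}")
```

Every pass over the data adjusts the weights to reduce the error, which is why both the amount of data and the compute available to process it matter so much.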

Machine Learning

Machine learning is considered a subset of AI. Neural networks have been an important ingredient of machine learning for some time, but only recently have they become the focus of AI and deep learning. There are three general classes of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

- Supervised machine learning is a statistical learning methodology based on the overarching requirement to make predictions. There are two main types of supervised learning. Regression makes numeric predictions (e.g., predicting the cost of a home based on the number of rooms). Classification makes categorical predictions, such as whether an email is spam or not spam. Data sets for supervised learning are called labeled data sets because they include the variable that the algorithm is trying to predict. Labeled data sets are used to train the algorithm so that it can make predictions on new data (outside of the training set). Generally, training supervised learning algorithms on larger data sets produces more accurate results. This is why big data has been a boon to machine learning.

- Unsupervised machine learning is a statistical learning methodology based on the need for knowledge discovery, i.e., finding previously unknown patterns in data sets. In unsupervised learning, there are no labels and hence no predictions to be made. Instead, techniques such as clustering (e.g., hierarchical clustering, k-means, or density-based DBSCAN) are used to detect patterns that may help reveal the structure of the data. Another unsupervised method, dimension reduction, reduces the dimensionality of a data set in order to simplify analysis. Such techniques include principal component analysis and autoencoders.

- Reinforcement learning is a strategy-optimization method. It differs from the two methods above in that it requires feedback but not direct labels. It automatically explores the actions available in an environment, such as driving a vehicle or playing a game, to find an optimized strategy that maximizes a cumulative reward. It came into the spotlight in 2016, when Google’s AI program AlphaGo beat Lee Sedol, an 18-time world champion, at the game of Go.
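The supervised learning item above can be illustrated with a k-nearest-neighbors classifier, chosen here only because it is short; the text does not prescribe a specific algorithm. A labeled data set of hypothetical email features (link count and exclamation-mark count, invented for illustration) is used to predict labels for new, unseen emails. `knn_predict` is a hypothetical helper, not a library function.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among the k closest labeled training examples."""
    # train is a list of (feature_vector, label) pairs, i.e., a labeled data set
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]  # math.dist: Python 3.8+
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical email features: (number of links, number of "!" characters)
labeled = [
    ((8, 6), "spam"), ((10, 9), "spam"), ((7, 8), "spam"),
    ((1, 0), "not spam"), ((0, 1), "not spam"), ((2, 1), "not spam"),
]
print(knn_predict(labeled, (9, 7)))  # → spam
print(knn_predict(labeled, (1, 1)))  # → not spam
```

The labels in the training data are what make this supervised: the algorithm never has to guess what the categories are, only which one a new example belongs to.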
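For the unsupervised learning item, here is a minimal sketch of k-means clustering (Lloyd’s algorithm) in plain Python. The points carry no labels; the algorithm groups them on its own. The data, the fixed starting centroids (practical implementations usually choose them randomly or with k-means++), and the iteration count are illustrative assumptions.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Plain k-means: alternate between assigning points and moving centroids."""
    centroids = [tuple(c) for c in centroids]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical unlabeled 2-D points: there is no "answer" column to predict
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
for c, members in zip(centroids, clusters):
    print([round(v, 2) for v in c], members)
```

With this data the two centroids settle at about (1.17, 1.5) and (8.5, 8.33), revealing two groups that were never labeled as such.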
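The reinforcement learning item can be illustrated with tabular Q-learning, one classic reinforcement learning algorithm (not the method behind AlphaGo, which combined deep neural networks with tree search). In this hypothetical toy environment, an agent in a five-cell corridor learns, purely from a reward of 1 for reaching the right-hand end, that stepping right is the best action in every cell. The corridor, the reward, the helper name `q_learning`, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start in cell 0, reward 1 for reaching the last cell."""
    rng = random.Random(seed)
    moves = [-1, +1]                          # action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action] = estimated future reward
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = min(max(s + moves[a], 0), goal)  # walls at both ends
            reward = 1.0 if s_next == goal else 0.0
            # Move the estimate toward reward + discounted best value of the next state
            future = 0.0 if s_next == goal else max(q[s_next])
            q[s][a] += alpha * (reward + gamma * future - q[s][a])
            s = s_next
    return q

q = q_learning()
policy = ["left" if q_s[0] > q_s[1] else "right" for q_s in q[:-1]]
print(policy)  # → ['right', 'right', 'right', 'right']
```

No cell is ever labeled with the correct action; the strategy emerges from the reward feedback alone, which is the distinction the text draws between reinforcement learning and supervised learning.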

Deep Learning

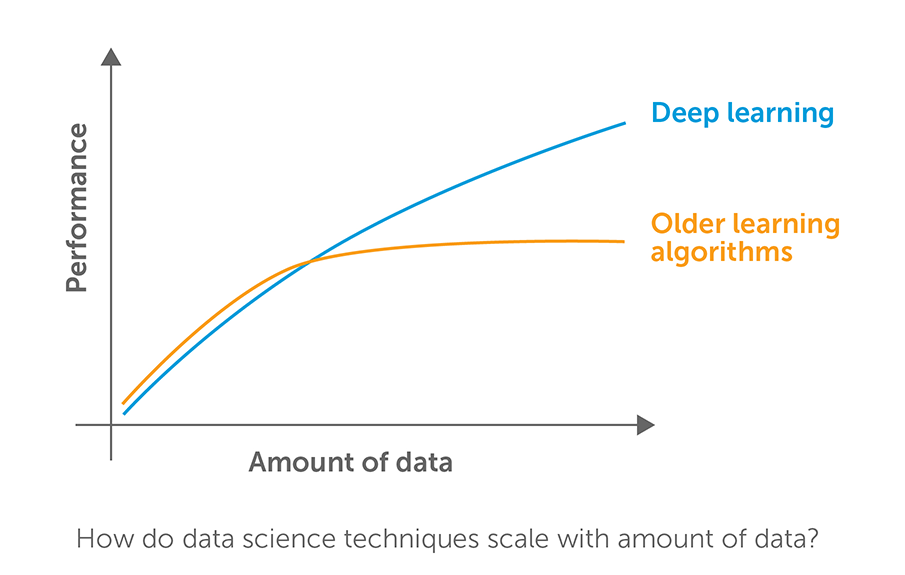

As outlined above, ANNs are the main vehicle for AI, and they are the foundation of deep learning. There are also specialized versions, such as convolutional neural networks and recurrent neural networks, that address particular problem domains. But scale is an important consideration with deep learning as well. Deep learning expert Andrew Ng gave a talk at ExtractConf 2015 where he commented, “As we construct larger neural networks and train them with increasingly more data, their performance continues to improve. This is generally different from other machine learning techniques that reach a plateau in performance. For most flavors of the old generations of learning algorithms, performance will plateau. Deep learning is the first class of algorithms that is scalable. Performance just keeps getting better as you feed the algorithms more data.” The accompanying figure from Ng visualizes this effect.

Many people new to the field of AI wonder where the “deep” part of deep learning comes from. The “deep” designation is used to indicate the depth of the ANN, so a neural network with many hidden layers is deemed to be deep.

Figure: Why Deep Learning (source: Andrew Ng)

One problem domain that has benefited from the rise of deep learning is computer vision, where it is now possible to train ANNs on large amounts of image and video data (high-dimensional data sets). Deep learning algorithms can now be used for tasks such as real-time image and video classification, which is an integral part of the software that makes autonomous vehicles possible. In addition, big-name companies like Facebook have found success with this technology, using it for facial recognition with a high degree of accuracy. Other prominent problem domains served by deep learning include speech recognition and natural language translation.

The Future of Deep Learning

Early in the advance of deep learning, unsupervised learning methods played the biggest role in raising interest. Since then, they have been overshadowed by the success of purely supervised learning methods. Many believe, however, that unsupervised learning will become far more important in the long term because it doesn’t require humans to label the data, a process that can take years. One of the most promising recent areas of deep learning research is generative adversarial networks (GANs), which consist of two deep learning models: one generates candidate samples, and the other tries to determine which candidates come from the “true” data and which were generated. We’ve seen promising results thus far (generated data has been able to fool the other network), and if this approach is successful, it will open new doors for many broad applications in deep learning. Time will tell, but by all indications, AI, machine learning, and deep learning will be with us for some time to come.
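The two-player setup behind GANs can be made concrete by writing down what each network tries to minimize. The sketch below computes the binary cross-entropy losses commonly used for GANs, with the “non-saturating” generator loss suggested in the original GAN paper. It shows only the objectives, not a full training loop; the discriminator outputs are made-up numbers standing in for the probabilities a real discriminator network would produce, and the function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when D outputs ~1 on real samples and ~0 on generated ones."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when D is fooled into outputting ~1 on generated samples."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that each sample is real)
d_real = [0.9, 0.8, 0.95]
confident_fake = [0.1, 0.05, 0.2]  # the discriminator easily spots these fakes
fooled_fake = [0.7, 0.8, 0.6]      # these generated samples fool the discriminator

print(discriminator_loss(d_real, confident_fake) < discriminator_loss(d_real, fooled_fake))  # → True
print(generator_loss(fooled_fake) < generator_loss(confident_fake))  # → True
```

The two losses pull in opposite directions: whatever lowers the generator’s loss (fooling the discriminator) raises the discriminator’s, which is what drives both networks to improve during training.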

Want to see artificial intelligence, machine learning, and deep learning in action? Download our white paper “Tactical Intelligence Derived from Social Media Sources,” which puts some of the principles discussed here to the test in the real world.

Qi Zhao is vice president of analytics at Opera Solutions.